Machine Learning (3)

Social data analytics has recently gained esteem in predicting the future outcomes of important events like major political elections and box-office movie revenues. Related actions such as tweeting, liking, and commenting can provide valuable insights about consumer’s attention to a product or service. Such an information venue presents an interesting opportunity to harness data from various social media outlets and generate specific predictions for public acceptance and valuation of new products and brands. This new technology based on gauging consumer interest via the analysis of social media content provides a new and vital tool to the sales team to predict sales numbers with a great deal of accuracy.

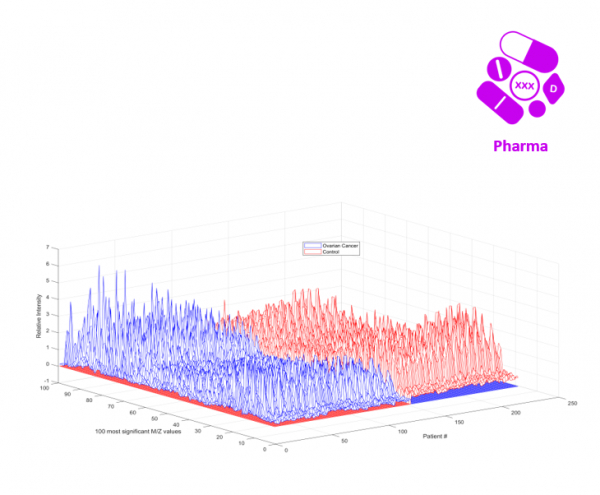

The current business model of pharmaceutical industry where a new drug may take a decade and Billions of dollars to develop is no longer viable in this digital era of big data and cloud computing. Giant IT companies such as Amazon and Google are leveraging their deep pockets and strong AI footprints to lower the entry barrier to this vital sector and render classical models of drug discovery and development obsolete.

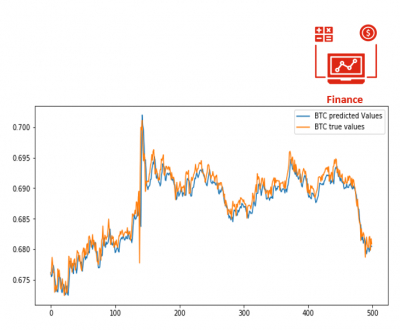

A cryptocurrency is a digital currency that is self-organized, whose value is determined mainly by social consensus. In addition to being decentralized, a cryptocurrency is not backed by any third party or central bank. Essentially, cryptocurrencies are limited entries in a database, not controlled by anyone but by the network itself, which is unchangeable unless specific conditions are fulfilled. All these conditions make cryptocurrency prices unpredictable and constantly fluctuating.